Using OAuth2 proxy for Kubernetes Dashboard

OAuth2-proxy was once a bit.ly project, but it was officially archived in Sept 2018. It lives on, though, at https://github.com/oauth2-proxy/oauth2-proxy.

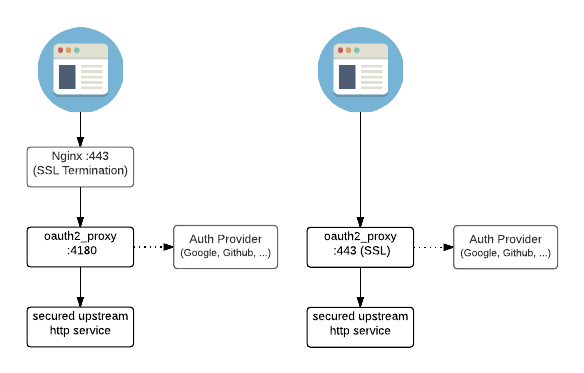

OAuth2-proxy is a lightweight proxy that you put in front of your vulnerable services. It enforces OAuth authentication against an impressive collection of providers (including generic OIDC) before the backend service is displayed to the calling user.

This recipe will describe setting up OAuth2 Proxy for the purposes of passing authentication headers to Kubernetes Dashboard, which doesn't provide its own authentication, but instead relies on Kubernetes' own RBAC auth.

In order to view your Kubernetes resources on the dashboard, you can create a fully-privileged service account (yuk!), copy and paste your own auth token upon login (double yuk!), or use OAuth2 Proxy to authenticate against your OIDC provider on your behalf and pass the resulting token to Kubernetes Dashboard, which presents it to the kube-apiserver (like a boss!).

If you're after a generic authentication middleware which doesn't need to pass OAuth headers, then Traefik Forward Auth is a better option, since it supports multiple backends in "auth host" mode.

OAuth2 Proxy requirements

Ingredients

Already deployed:

- A Kubernetes cluster, configured for OIDC authentication against a supported provider

- Flux deployment process bootstrapped

- An Ingress controller to route incoming traffic to services

Optional:

- External DNS to create a DNS entry the "flux" way

- Persistent storage if you want to use Redis for session persistence

Preparation

OAuth2 Proxy Namespace

We need a namespace to deploy our HelmRelease and associated YAMLs into. Per the flux design, I create this example yaml in my flux repo at /bootstrap/namespaces/namespace-kubernetes-dashboard.yaml:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard
```

OAuth2 Proxy HelmRepository

We're going to install the OAuth2 Proxy helm chart from the oauth2-proxy repository, so I create the following in my flux repo (assuming it doesn't already exist):

```yaml
apiVersion: source.toolkit.fluxcd.io/v1beta1
kind: HelmRepository
metadata:
  name: oauth2-proxy
  namespace: flux-system
spec:
  interval: 15m
  url: https://oauth2-proxy.github.io/manifests/
```

OAuth2 Proxy Kustomization

Now that the "global" elements of this deployment (just the HelmRepository in this case) have been defined, we do some "flux-ception", and go one layer deeper, adding another Kustomization, telling flux to deploy any YAMLs found in the repo at /kubernetes-dashboard/. I create this example Kustomization in my flux repo:

```yaml
apiVersion: kustomize.toolkit.fluxcd.io/v1beta2
kind: Kustomization
metadata:
  name: oauth2-proxy
  namespace: flux-system
spec:
  interval: 30m
  path: ./kubernetes-dashboard
  prune: true # remove any elements later removed from the above path
  timeout: 10m # if not set, this defaults to the interval duration (30m here)
  sourceRef:
    kind: GitRepository
    name: flux-system
  healthChecks:
    - apiVersion: helm.toolkit.fluxcd.io/v2beta1
      kind: HelmRelease
      name: oauth2-proxy
      namespace: kubernetes-dashboard
```

Fast-track your fluxing! 🚀

Is crafting all these YAMLs by hand too much of a PITA?

I automatically and instantly share (with my sponsors) a private "premix" git repository, which includes an ansible playbook to auto-create all the necessary files in your flux repository, for each chosen recipe!

Let the machines do the TOIL!

OAuth2 Proxy HelmRelease

Lastly, having set the scene above, we define the HelmRelease which will actually deploy oauth2-proxy into the cluster. We start with a basic HelmRelease YAML, like this example:

```yaml
apiVersion: helm.toolkit.fluxcd.io/v2beta1
kind: HelmRelease
metadata:
  name: oauth2-proxy
  namespace: kubernetes-dashboard
spec:
  chart:
    spec:
      chart: oauth2-proxy
      version: 6.18.x # auto-update to semver bugfixes only (1)
      sourceRef:
        kind: HelmRepository
        name: oauth2-proxy
        namespace: flux-system
  interval: 15m
  timeout: 5m
  releaseName: oauth2-proxy
  values: # paste contents of upstream values.yaml below, indented 4 spaces (2)
```

- I like to set this to the semver minor version of the current OAuth2 Proxy helm chart, so that I'll inherit bug fixes but not any new features (since I'll need to manually update my values to accommodate new releases anyway).

- Paste the full contents of the upstream values.yaml here, indented 4 spaces under the values: key.
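To make note (1) concrete: a constraint like 6.18.x accepts any patch release in the 6.18 minor line, and Flux then installs the highest one it finds. The Python below is a simplified model of that behaviour, not Flux's implementation (Flux relies on a full semver library that also handles ranges, ~ and ^ operators, and pre-release tags). The function names and the list of published chart versions are hypothetical.

```python
from typing import List, Optional


def _parts(version: str) -> List[str]:
    """Split 'v6.18.2' or '6.18.2' into ['6', '18', '2']."""
    return version.strip().lstrip("vV").split(".")


def matches_wildcard(constraint: str, version: str) -> bool:
    """Simplified model of an 'x'-wildcard constraint such as '6.18.x'.

    Each position must match exactly unless the constraint holds 'x',
    'X' or '*' there. Ranges, ~/^ operators and pre-release tags are
    deliberately out of scope.
    """
    wanted, actual = _parts(constraint), _parts(version)
    if len(actual) != 3 or not all(p.isdigit() for p in actual):
        return False  # only plain MAJOR.MINOR.PATCH releases are considered
    if len(wanted) != 3:
        return False  # keep the model strict: constraint needs 3 parts
    return all(w in ("x", "X", "*") or w == a for w, a in zip(wanted, actual))


def pick_latest(constraint: str, available: List[str]) -> Optional[str]:
    """Return the highest available version that satisfies the constraint."""
    matching = [v for v in available if matches_wildcard(constraint, v)]
    if not matching:
        return None
    # Compare numerically so that 6.18.10 sorts above 6.18.2
    return max(matching, key=lambda v: tuple(int(p) for p in _parts(v)))


# Hypothetical chart versions published to the repository
published = ["6.17.9", "6.18.0", "6.18.2", "6.18.10", "6.19.0"]
print(pick_latest("6.18.x", published))
```

With these inputs the pick is 6.18.10: the 6.19.0 release, which might need new values, is never selected until you edit the constraint yourself.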

If we deploy this HelmRelease as-is, we'll inherit every default from the upstream OAuth2 Proxy helm chart. That's rarely what we want, so my preference is to take the entire contents of the chart's values.yaml and paste them (indented) under the values key. I can then make my own changes in the context of the entire values.yaml, rather than cherry-picking just the items I want to change, which makes future chart upgrades simpler.

Why not put values in a separate ConfigMap?

Didn't you previously advise to put helm chart values into a separate ConfigMap?

Yes, I did. And in practice, I've changed my mind.

Why? Because having the helm values directly in the HelmRelease offers the following advantages:

- If you use the YAML extension in VSCode, you'll see a full path to the YAML elements, which can make grokking complex charts easier.

- When flux detects a change to a value in a HelmRelease, this forces an immediate reconciliation of the HelmRelease, as opposed to the ConfigMap solution, which requires waiting on the next scheduled reconciliation.

- Renovate can parse HelmRelease YAMLs and create PRs when they contain docker image references which can be updated.

- In practice, adapting a HelmRelease to match upstream chart changes is no different to adapting a ConfigMap, and so there's no real benefit to splitting the chart values into a separate ConfigMap, IMO.

Then work your way through the values you pasted, and change any which are specific to your configuration.

Configure OAuth2 Proxy

The following sections detail suggested changes to the values pasted into /kubernetes-dashboard/helmrelease-oauth2-proxy.yaml from the OAuth2 Proxy helm chart's values.yaml. The values are already indented correctly to be copied, pasted into the HelmRelease, and adjusted as necessary.

OAuth2 Proxy Config

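Set your clientID and clientSecret to match the client you've set up in your OAuth provider. You can choose whatever you like for your cookieSecret, as long as it's random and 16, 24 or 32 bytes long; the openssl command in the values comment produces a suitable value.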
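If openssl isn't handy, the pipeline suggested in the chart's comment (openssl rand -base64 32 | head -c 32 | base64) can be mirrored with Python's standard library. This is a sketch, assuming you want exactly the same shape of output as that shell pipeline; the function name is hypothetical.

```python
import base64
import secrets


def make_cookie_secret() -> str:
    """Mirror of: openssl rand -base64 32 | head -c 32 | base64"""
    # openssl rand -base64 32: 32 random bytes, base64-encoded (44 chars)
    random_b64 = base64.b64encode(secrets.token_bytes(32))
    # head -c 32: keep only the first 32 characters
    truncated = random_b64[:32]
    # base64: encode those 32 characters once more
    return base64.b64encode(truncated).decode("ascii")


secret = make_cookie_secret()
print(secret)
```

The result is a 44-character string that decodes to 32 characters (32 bytes), which satisfies oauth2-proxy's 16, 24 or 32 byte requirement for the cookie secret.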

```yaml
    config:
      # Add config annotations
      annotations: {}
      # OAuth client ID
      clientID: "XXXXXXX"
      # OAuth client secret
      clientSecret: "XXXXXXXX"
      # Create a new secret with the following command
      # openssl rand -base64 32 | head -c 32 | base64
      # Use an existing secret for OAuth2 credentials (see secret.yaml for required fields)
      # Example:
      # existingSecret: secret
      cookieSecret: "XXXXXXXXXXXXXXXX"
      # The name of the cookie that oauth2-proxy will create
      # If left empty, it will default to the release name
      cookieName: ""
      google: {}
        # adminEmail: xxxx
        # useApplicationDefaultCredentials: true
        # targetPrincipal: xxxx
        # serviceAccountJson: xxxx
        # Alternatively, use an existing secret (see google-secret.yaml for required fields)
        # Example:
        # existingSecret: google-secret
        # groups: []
        # Example:
        #  - group1@example.com
        #  - group2@example.com
      # Default configuration, to be overridden
      configFile: |-
        email_domains = [ "*" ] # (1)!
        upstreams = [ "http://kubernetes-dashboard" ] # (2)!
```

- Accept any emails passed to us by the auth provider, which we fully control. You might do this differently if you were using an auth provider like Google or GitHub.

- Set upstreams[] to match the backend service you want to protect, in this case the kubernetes-dashboard service in the same namespace (see Chef's notes).
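Why is email_domains = [ "*" ] only reasonable when you control the provider? The Python below is a deliberately simplified model of an email-domain allow-list, not oauth2-proxy's actual code. It assumes "*" means allow-all and that otherwise the domain after the last "@" must equal a listed entry; it ignores oauth2-proxy's other options, such as subdomain matching and an authenticated-emails file. The function name is hypothetical.

```python
from typing import List


def email_allowed(email: str, email_domains: List[str]) -> bool:
    """Simplified model of an email_domains allow-list check."""
    if "*" in email_domains:
        return True  # wildcard: any address the provider vouches for passes
    _, at, domain = email.rpartition("@")
    if not at:
        return False  # no "@", so not an email address at all
    return domain.lower() in (d.lower() for d in email_domains)


print(email_allowed("alice@gmail.com", ["*"]))            # True
print(email_allowed("alice@gmail.com", ["example.com"]))  # False
print(email_allowed("bob@example.com", ["example.com"]))  # True
```

With a public provider like Google or GitHub, the first case means any account holder on the internet gets through, which is why you'd restrict the list there. With a self-hosted provider, only accounts you created can log in anyway.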

Set ExtraArgs

Take note of the following, specifically:

```yaml
    extraArgs:
      provider: oidc
      provider-display-name: "Authentik"
      skip-provider-button: "true"
      pass-authorization-header: "true" # (1)!
      redis-connection-url: "redis://redis-master" # if you want to use redis
      session-store-type: redis # alternative is to use cookies
      cookie-refresh: 15m
```

- This is critically important, and is what makes OAuth2 Proxy suited to this task. We need the authorization headers produced from the OIDC transaction to be passed to Kubernetes Dashboard, so that it can interact with kube-apiserver on our behalf.
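When debugging, it can help to see what actually reaches Kubernetes Dashboard once pass-authorization-header is enabled. Below is a minimal Python sketch, assuming the upstream receives a header of the form Authorization: Bearer <JWT>. It decodes the token's claims without verifying the signature, so treat it strictly as a debugging aid. The helper names and the unsigned demo token are hypothetical. The claims worth checking are iss and aud, which kube-apiserver compares against its --oidc-issuer-url and --oidc-client-id flags.

```python
import base64
import json


def bearer_claims(authorization_header: str) -> dict:
    """Decode the claims of a 'Bearer <JWT>' header WITHOUT verifying it.

    kube-apiserver, not this function, is responsible for checking the
    signature, issuer and audience.
    """
    scheme, _, token = authorization_header.strip().partition(" ")
    parts = token.split(".")
    if scheme.lower() != "bearer" or len(parts) != 3:
        raise ValueError("expected 'Bearer <header>.<payload>.<signature>'")
    payload = parts[1]
    # JWT segments are unpadded base64url; restore padding before decoding
    payload += "=" * (-len(payload) % 4)
    return json.loads(base64.urlsafe_b64decode(payload))


def _b64url_json(obj: dict) -> str:
    """Encode a dict as an unpadded base64url JSON segment (demo helper)."""
    raw = json.dumps(obj).encode("utf-8")
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


# Hypothetical, unsigned demo token; a real one comes from your OIDC provider
demo_header = "Bearer " + ".".join([
    _b64url_json({"alg": "none", "typ": "JWT"}),
    _b64url_json({"iss": "https://auth.example.com", "aud": "kubernetes",
                  "email": "alice@example.com"}),
    "signature",
])

claims = bearer_claims(demo_header)
print(claims["iss"], claims["aud"], claims["email"])
```

If iss or aud in a real token doesn't match the API server's OIDC flags, the dashboard will show authorization errors even though the OAuth2 Proxy login succeeded.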

Setup Ingress

Now you'll need an ingress. Note that, since OAuth2 Proxy sits in front of Kubernetes Dashboard, this is the ingress you'll use to reach the dashboard itself, so you'll want something like the following:

```yaml
    ingress:
      enabled: true
      className: nginx
      path: /
      # Only used if API capabilities (networking.k8s.io/v1) allow it
      pathType: ImplementationSpecific
      # Used to create an Ingress record.
      hosts:
        - kubernetes-dashboard.example.com
```

Install OAuth2 Proxy!

Commit the changes to your flux repository, and either wait for the reconciliation interval, or force a reconciliation using flux reconcile source git flux-system. You should see the kustomization appear:

```
~ ❯ flux get kustomizations oauth2-proxy
NAME            READY   MESSAGE                           REVISION        SUSPENDED
oauth2-proxy    True    Applied revision: main/70da637    main/70da637    False
~ ❯
```

The helmrelease should be reconciled...

```
~ ❯ flux get helmreleases -n kubernetes-dashboard oauth2-proxy
NAME            READY   MESSAGE                             REVISION    SUSPENDED
oauth2-proxy    True    Release reconciliation succeeded    v6.18.x     False
~ ❯
```

And you should have happy pods in the kubernetes-dashboard namespace:

```
~ ❯ k get pods -n kubernetes-dashboard -l app.kubernetes.io/name=oauth2-proxy
NAME                            READY   STATUS    RESTARTS   AGE
oauth2-proxy-7c94b7446d-nwsss   1/1     Running   0          5m14s
~ ❯
```

Is it working?

Browse to the URL you configured in your ingress above, and confirm that you're prompted to log into your OIDC provider.

Summary

What have we achieved? We're half-way to getting Kubernetes Dashboard working against our OIDC-enabled cluster. We've got OAuth2 Proxy authenticating against our OIDC provider, and passing on the auth headers to the upstream.


Created:

- OAuth2 Proxy on Kubernetes, running and ready to pass auth headers to Kubernetes Dashboard

Next:

- Deploy Kubernetes Dashboard as the upstream protected by OAuth2 Proxy

Chef's notes 📓

- While you might, like me, hope that since upstreams is a list, you could use one OAuth2 Proxy instance in front of multiple upstreams, sadly the intention here is to split the upstream by path, not to provide entirely different upstreams based on FQDN. Thus, you're stuck with one OAuth2 Proxy per protected instance.

Tip your waiter (sponsor) 👏

Did you receive excellent service? Want to compliment the chef? (..and support development of current and future recipes!) Sponsor me on Github / Ko-Fi / Patreon, or see the contribute page for more (free or paid) ways to say thank you! 👏

Employ your chef (engage) 🤝

Is this too much of a geeky PITA? Do you just want results, stat? I do this for a living - I'm a full-time Kubernetes contractor, providing consulting and engineering expertise to businesses needing short-term, short-notice support in the cloud-native space, including AWS/Azure/GKE, Kubernetes, CI/CD and automation.

Learn more about working with me here.
