Nitter on Docker Swarm

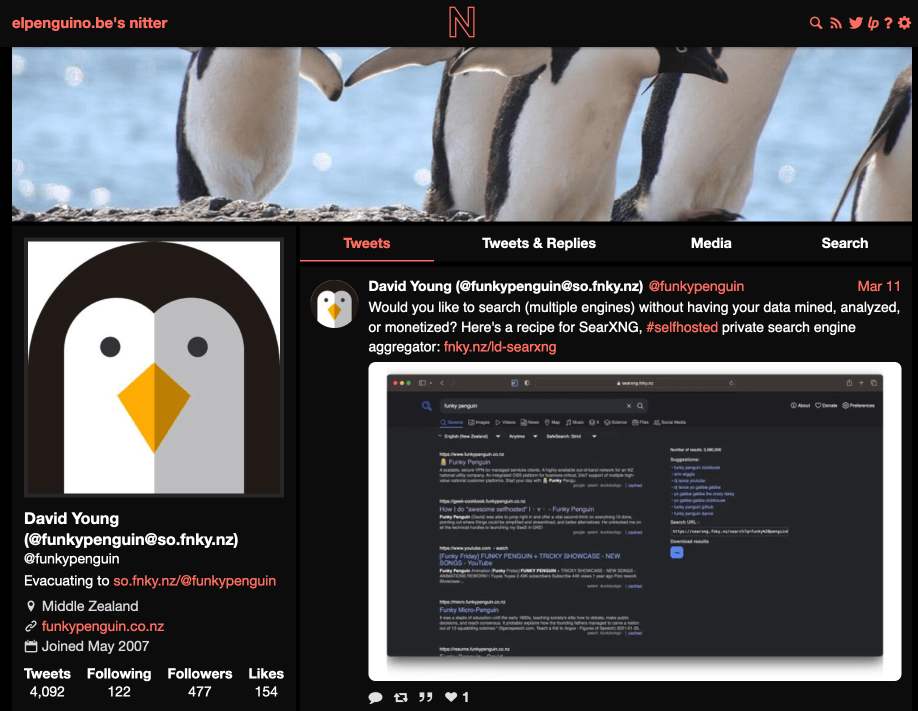

Are you becoming increasingly wary of Twitter, post-space-Karen? Try Nitter, a (read-only) private frontend to Twitter, supporting username and keyword search, with geeky features like RSS and theming!

But what about Twitter's API Developer rules?

In a GitHub issue querying whether Nitter would be affected by Twitter's API shenanigans, developer @zedeus pointed out that Nitter uses an unofficial API, and responded concisely:

I'm not bound by that developer agreement, so whatevs.

*micdrop*

Nitter is a free and open source alternative Twitter front-end focused on privacy and performance, with features including:

- No JavaScript or ads
- All requests go through the backend, client never talks to Twitter
- Prevents Twitter from tracking your IP or JavaScript fingerprint
- Uses Twitter's unofficial API (no rate limits or developer account required)
- Lightweight (for @nim_lang, 60KB vs 784KB from twitter.com)
- RSS feeds, Themes, Mobile support (responsive design)

Nitter Requirements

Ingredients

Already deployed:

- Docker swarm cluster with persistent shared storage

- Traefik configured per design

- DNS entry for the hostname you intend to use (or a wildcard), pointed to your keepalived IP

Related:

- Traefik Forward Auth or Authelia to secure your Traefik-exposed services with an additional layer of authentication

Preparation

Set up data locations

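First, create the directories which will hold the data Redis caches for Nitter, and Nitter's config file. In swarm mode, a bind-mounted host path must already exist, or the service's tasks will fail to start:

```bash
mkdir -p /var/data/nitter/redis /var/data/config/nitter
```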

Create config file

Nitter is configured using a flat text file, so create `/var/data/config/nitter/nitter.conf` from the example in the repo, and then we'll mount it (read-only) into the container, below. Here's what it looks like, if you'd prefer a copy-paste (the only critical setting is that `redisHost` be set to `redis`):

```ini
[Server]
address = "0.0.0.0"
port = 8080
https = true # disable to enable cookies when not using https
httpMaxConnections = 100
staticDir = "./public"
title = "example.com's nitter"
hostname = "nitter.example.com"

[Cache]
listMinutes = 240 # how long to cache list info (not the tweets, so keep it high)
rssMinutes = 10 # how long to cache rss queries
redisHost = "redis" #(1)!
redisPort = 6379
redisPassword = ""
redisConnections = 20 # connection pool size
redisMaxConnections = 30
# max, new connections are opened when none are available, but if the pool size
# goes above this, they're closed when released. don't worry about this unless
# you receive tons of requests per second

[Config]
hmacKey = "imasecretsecretkey" # random key for cryptographic signing of video urls
base64Media = false # use base64 encoding for proxied media urls
enableRSS = true # set this to false to disable RSS feeds
enableDebug = false # enable request logs and debug endpoints
proxy = "" # http/https url, SOCKS proxies are not supported
proxyAuth = ""
tokenCount = 10
# minimum amount of usable tokens. tokens are used to authorize API requests,
# but they expire after ~1 hour, and have a limit of 187 requests.
# the limit gets reset every 15 minutes, and the pool is filled up so there's
# always at least $tokenCount usable tokens. again, only increase this if
# you receive major bursts all the time

# Change default preferences here, see src/prefs_impl.nim for a complete list
[Preferences]
theme = "Nitter"
replaceTwitter = "nitter.example.com" #(2)!
replaceYouTube = "piped.video" #(3)!
replaceReddit = "teddit.net" #(4)!
proxyVideos = true
hlsPlayback = false
infiniteScroll = false
```

1. Because we're using Docker Swarm, we can simply use `redis` (the name of the Redis service in the stack) as the target Redis host.
2. Set this to your Nitter hostname to have Nitter rewrite twitter.com links in tweets to itself.
3. If you've set up a private YouTube frontend such as Invidious (or Piped, as in the example value), set this to your instance to have Nitter rewrite any YouTube URLs to it.
4. I don't know what Teddit is (yet), but I assume it's a private Reddit proxy, and I hope that it has a teddy bear as its logo!
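You can sanity-check your copy of `nitter.conf` before deploying. Here's a minimal Python sketch. It is a hypothetical helper, not part of Nitter. It assumes the file parses with Python's standard `configparser`, which holds for the example above because comments start with `#`. It checks the one critical setting (`redisHost = "redis"`) and flags the example `hmacKey` if you haven't replaced it. It also suggests a random replacement key generated with `secrets.token_hex`.

```python
import configparser
import secrets

# The placeholder hmacKey shipped in the example nitter.conf above
EXAMPLE_HMAC_KEY = "imasecretsecretkey"


def load_nitter_conf(text: str) -> configparser.ConfigParser:
    """Parse nitter.conf-style text, dropping '#' comments (including inline ones)."""
    parser = configparser.ConfigParser(
        inline_comment_prefixes=("#",),  # strips e.g. ' #(1)!' after a value
        interpolation=None,              # treat '%' in values literally
    )
    parser.optionxform = str  # keep camelCase keys such as redisHost
    parser.read_string(text)
    return parser


def setting(parser: configparser.ConfigParser, section: str, key: str) -> str:
    """Return a value with its surrounding double quotes removed."""
    return parser[section][key].strip('"')


def check_conf(text: str) -> list[str]:
    """Return warnings for two common mistakes (hypothetical checks, not Nitter's own)."""
    parser = load_nitter_conf(text)
    warnings = []
    if setting(parser, "Cache", "redisHost") != "redis":
        warnings.append("redisHost should be the swarm service name 'redis'")
    if setting(parser, "Config", "hmacKey") == EXAMPLE_HMAC_KEY:
        warnings.append("hmacKey is still the example value; replace it")
    return warnings


def new_hmac_key() -> str:
    """Generate a random 64-character hex string to use as hmacKey."""
    return secrets.token_hex(32)


if __name__ == "__main__":
    import sys

    # Usage: python check_nitter_conf.py /var/data/config/nitter/nitter.conf
    if len(sys.argv) > 1:
        with open(sys.argv[1], encoding="utf-8") as conf:
            for warning in check_conf(conf.read()):
                print("WARNING:", warning)
    print("Suggested hmacKey:", new_hmac_key())
```

The script name `check_nitter_conf.py` is arbitrary. The quotes are stripped by hand because `configparser` keeps them as part of the value.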

Nitter Docker Swarm config

Create a docker swarm config file in docker-compose syntax (v3), something like the example below:

Fast-track with premix! 🚀

I automatically and instantly share (with my sponsors) a private "premix" git repository, which includes necessary docker-compose and env files for all published recipes. This means that sponsors can launch any recipe with just a git pull and a docker stack deploy 👍.

🚀 Update: Premix now includes an ansible playbook, so that sponsors can deploy an entire stack + recipes, with a single ansible command! (more here)

```yaml
version: "3.2" # https://docs.docker.com/compose/compose-file/compose-versioning/#version-3

services:
  nitter:
    image: zedeus/nitter:latest
    volumes:
      - /var/data/config/nitter/nitter.conf:/src/nitter.conf:Z,ro
    deploy:
      replicas: 1
      labels:
        # traefik
        - traefik.enable=true
        - traefik.docker.network=traefik_public
        - traefik.http.routers.nitter.rule=Host(`nitter.example.com`)
        - traefik.http.routers.nitter.entrypoints=https
        - traefik.http.services.nitter.loadbalancer.server.port=8080
    networks:
      - internal
      - traefik_public

  redis:
    image: redis:6-alpine
    command: redis-server --save 60 1 --loglevel warning
    volumes:
      - /var/data/nitter/redis:/data
    networks:
      - internal

networks:
  traefik_public:
    external: true
  internal:
    driver: overlay
    ipam:
      config:
        - subnet: 172.16.24.0/24
```

Note

Set up unique static subnets for every stack you deploy. This avoids IP/gateway conflicts which can otherwise occur when you're creating/removing stacks a lot. See my list here.
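If you keep a list of per-stack subnets, a quick overlap check can catch a clash early. Here's a minimal sketch using Python's standard `ipaddress` module. The stack names and the extra subnets are hypothetical; only `172.16.24.0/24` comes from the compose file above.

```python
import ipaddress
from itertools import combinations


def find_overlaps(stack_subnets: dict[str, str]) -> list[tuple[str, str]]:
    """Return every pair of stacks whose subnets overlap, ordered by stack name."""
    networks = {
        name: ipaddress.ip_network(cidr)  # raises ValueError on a malformed CIDR
        for name, cidr in stack_subnets.items()
    }
    return [
        (a, b)
        for a, b in combinations(sorted(networks), 2)
        if networks[a].overlaps(networks[b])
    ]


if __name__ == "__main__":
    # Hypothetical allocation list; only "nitter" comes from this recipe
    allocations = {
        "nitter": "172.16.24.0/24",
        "otherstack": "172.16.25.0/24",
        "typo-stack": "172.16.24.128/25",  # deliberately clashes with nitter
    }
    for a, b in find_overlaps(allocations):
        print(f"{a} and {b} overlap")  # prints: nitter and typo-stack overlap
```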

Shouldn't we back up Redis?

I'm not sure exactly what Redis is used for, but Nitter is read-only, so what's to back up? I expect that Redis is simply used for caching, so that content you've already seen via Nitter can be re-loaded faster. The `--save 60 1` argument tells Redis to write a snapshot to disk after 60 seconds if at least one key has changed. So it's probably not a big deal to just back up Redis's data with your existing backup of `/var/data` (you do back up your data, right?)
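Here's a simplified model of the `--save 60 1` behaviour, based on a single Redis `save <seconds> <changes>` rule. A snapshot is due only when both thresholds have been passed since the last save: enough time and enough writes. It is an illustration with a hypothetical function name, not Redis's actual code. Real Redis also considers things like failed background saves.

```python
def snapshot_due(seconds_since_last_save: float, changes_since_last_save: int,
                 seconds: int = 60, changes: int = 1) -> bool:
    """Simplified model of one Redis 'save <seconds> <changes>' rule.

    The defaults mirror this recipe's `redis-server --save 60 1`.
    """
    enough_time = seconds_since_last_save > seconds
    enough_writes = changes_since_last_save >= changes
    return enough_time and enough_writes


if __name__ == "__main__":
    print(snapshot_due(61, 1))    # True: time has passed and something changed
    print(snapshot_due(61, 0))    # False: nothing changed, so nothing to save
    print(snapshot_due(30, 500))  # False: plenty of writes, but not enough time yet
```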

Serving

Launch Nitter!

Launch the Nitter stack by running `docker stack deploy nitter -c <path-to-docker-compose.yml>`, then browse to the URL you chose above, and you should be able to start viewing / searching Twitter privately and anonymously!

Now what?

Now that you have a Nitter instance, you could try one of the following ideas:

- Set up RSS feeds for users you enjoy following, and read their tweets in your RSS reader
- Set up RSS feeds for useful searches, and follow the search results in your RSS reader
- Use a browser add-on like "libredirect" to automatically redirect any links from twitter.com to your Nitter instance
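Here's a sketch of the URLs involved in these ideas, under a few assumptions. It assumes Nitter's RSS routes are `/<username>/rss` for a user's timeline and `/search/rss?f=tweets&q=<query>` for a search. Compare these with the RSS links your own instance shows. It also assumes `nitter.example.com` is your hostname. The `twitter_to_nitter` helper only illustrates the idea behind a redirect add-on like libredirect; it is not that add-on's code.

```python
from urllib.parse import quote, urlencode, urlsplit, urlunsplit

# Hypothetical: replace with the hostname from your Traefik Host() rule
NITTER_HOST = "nitter.example.com"
TWITTER_HOSTS = {"twitter.com", "www.twitter.com", "mobile.twitter.com"}


def user_rss_url(username: str, host: str = NITTER_HOST) -> str:
    """Feed of a user's tweets (assumes Nitter's /<username>/rss route)."""
    return f"https://{host}/{quote(username)}/rss"


def search_rss_url(query: str, host: str = NITTER_HOST) -> str:
    """Feed of tweet search results (assumes Nitter's /search/rss route)."""
    return f"https://{host}/search/rss?" + urlencode({"f": "tweets", "q": query})


def twitter_to_nitter(url: str, host: str = NITTER_HOST) -> str:
    """Point a twitter.com link at the Nitter instance, keeping path and query."""
    parts = urlsplit(url)
    if parts.hostname not in TWITTER_HOSTS:
        return url  # not a Twitter link, so leave it alone
    return urlunsplit(("https", host, parts.path, parts.query, parts.fragment))


if __name__ == "__main__":
    print(user_rss_url("nim_lang"))
    print(search_rss_url("docker swarm"))
    print(twitter_to_nitter("https://twitter.com/nim_lang/status/123?s=20"))
```

Paste the first two URLs into your RSS reader. `urlencode` takes care of spaces and other special characters in the search query.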

Summary

What have we achieved? We have our own instance of Nitter¹, and we can anonymously and privately consume Twitter without being subject to advertising or tracking. We can even consume Twitter content via RSS, and no unhinged billionaires can lock us out of the API!

Summary

Created:

- Our own Nitter instance, safe from meddling billionaires!

Chef's notes 📓

1. Since Nitter is private and read-only anyway, this recipe doesn't take into account any sort of authentication using Traefik Forward Auth. If you want to protect your Nitter instance behind either Traefik Forward Auth or Authelia, you'll just need to add the appropriate `traefik.http.routers.nitter.middlewares` label.

Tip your waiter (sponsor) 👏

Did you receive excellent service? Want to compliment the chef? (..and support development of current and future recipes!) Sponsor me on GitHub / Ko-Fi / Patreon, or see the contribute page for more (free or paid) ways to say thank you! 👏

Employ your chef (engage) 🤝

Is this too much of a geeky PITA? Do you just want results, stat? I do this for a living - I'm a full-time Kubernetes contractor, providing consulting and engineering expertise to businesses needing short-term, short-notice support in the cloud-native space, including AWS/Azure/GKE, Kubernetes, CI/CD and automation.

Learn more about working with me here.

Flirt with waiter (subscribe) 💌

Want to know now when this recipe gets updated, or when future recipes are added? Subscribe to the RSS feed, or leave your email address below, and we'll keep you updated.