Security in depth / zero trust is a 100% (awesome) PITA

Today I spent upwards of half my day deploying a single service into a client's cluster. Here's why I consider this to be a win...

Here's how the process went:

- Discover a 4-year-old GitHub repo containing the exact tool we needed (a Prometheus exporter for FoundationDB metrics).

- Attempt to upload the image into our private repo (Harbor, with Trivy vulnerability scanning enforced). Discover that it has 1191 critical CVEs; the upload is blocked.

- Rebuild the image with the latest node; 4 CVEs remain, and these are manually whitelisted¹. The image can now be added to the repo.

- The image must be signed with cosign for both the dev and prod infrastructure (each uses a separate signing key). Connaisseur prevents unsigned images from running in any of our clusters².

- The image is in the repo; now to deploy it... Add a deployment template to our existing database Helm chart. The deployment pipeline (Concourse CI) fails when running kube-score / kubeconform against the generated manifests, because they're missing the appropriate probes and securityContexts.

- Note that even if we had managed to sneak a less-than-secure deployment past kube-score's static linting, Kyverno's admission policies would have prevented the pod from running!

- Fix all the invalid / less-than-best-practice elements of the deployment, ensuring that resource limits, HPAs, and securityContexts are applied.

- The manifest deploys (pipeline is green!), but the pod immediately crashloops (it's not very robust code!).

- Examine the flows in Cilium's Hubble and determine that the pod is trying to talk to FoundationDB (duh), but is being blocked by default.

- Apply the appropriate labels to the deployment / pod to align with our pre-existing regime of CiliumNetworkPolicies, which permit ingress/egress to services based on pod labels (thanks Monzo!).

- No more dropped sessions in Hubble! But the pod still crashloops. Apply an Istio AuthorizationPolicy to permit mTLS traffic between the exporter and FoundationDB.

- Now the exporter can talk to FoundationDB! But no metrics are being gathered... why?

- Apply another update to a separate policy Helm chart (which only contains CiliumNetworkPolicy manifests), permitting the cluster's Prometheus to reach the exporter on whichever port the exporter happens to prefer.
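Harbor and Trivy enforce the CVE gate for us, but the decision they make is simple enough to sketch. The Python below is a rough model of that decision, not Harbor's implementation: it assumes a report shaped like `trivy image --format json` output (`Results[].Vulnerabilities[]`, each with a `VulnerabilityID` and `Severity`), and the function names are hypothetical.

```python
# Severity levels as Trivy reports them, lowest to highest.
SEVERITY_RANK = {"UNKNOWN": 0, "LOW": 1, "MEDIUM": 2, "HIGH": 3, "CRITICAL": 4}


def blocking_cves(report: dict, allowlist: set, threshold: str = "CRITICAL") -> list:
    """Return CVE IDs at or above `threshold` that have not been whitelisted.

    Assumes `report` has the shape of `trivy image --format json` output.
    """
    limit = SEVERITY_RANK[threshold]
    blocked = set()
    for result in report.get("Results", []):
        # Targets without findings may omit "Vulnerabilities" entirely.
        for vuln in result.get("Vulnerabilities") or []:
            severity = SEVERITY_RANK.get(vuln.get("Severity", "UNKNOWN"), 0)
            if severity >= limit and vuln["VulnerabilityID"] not in allowlist:
                blocked.add(vuln["VulnerabilityID"])
    return sorted(blocked)


def may_push(report: dict, allowlist: set) -> bool:
    """The image is accepted only when nothing blocking is left."""
    return not blocking_cves(report, allowlist)
```

In this sketch, whitelisting is per CVE ID rather than per image, so a newly disclosed critical CVE in the same image would still block the upload.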
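Why separate dev and prod signing keys matter is easiest to see in a toy model of the Connaisseur admission check. This is a hedged sketch, not how cosign works: cosign uses asymmetric signatures (clusters only need the public key), whereas this stand-in uses HMAC-SHA256 from Python's standard library so it runs without dependencies. The keys and the `sign` / `admit` helpers are hypothetical.

```python
import hashlib
import hmac

# Hypothetical per-environment keys. With HMAC the verifier needs the same
# secret as the signer; real cosign keys are asymmetric, so clusters only
# hold the public half.
KEYS = {"dev": b"hypothetical-dev-key", "prod": b"hypothetical-prod-key"}


def sign(image_digest: str, env: str) -> str:
    """Sign an image digest with one environment's key (HMAC stand-in)."""
    return hmac.new(KEYS[env], image_digest.encode(), hashlib.sha256).hexdigest()


def admit(image_digest: str, signatures: list, cluster_env: str) -> bool:
    """Admission decision: run the image only if one of its signatures
    verifies against *this* cluster's key. No signatures means no admission."""
    expected = sign(image_digest, cluster_env)
    return any(hmac.compare_digest(sig, expected) for sig in signatures)
```

An image signed only with the dev key is refused by a prod cluster, which is exactly the separation that two keys buy us.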
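The probe / securityContext failures from kube-score, and the Kyverno backstop behind it, come down to rules like the ones below. This Python sketch only illustrates that kind of static check; it is not kube-score's or Kyverno's actual rule set (their check names and details differ), and the exporter manifest is a hypothetical, trimmed-down Deployment (the `/metrics` path and `metrics` port name are assumptions).

```python
def lint_deployment(manifest: dict) -> list:
    """Return a list of problems; an empty list means the Deployment passes."""
    problems = []
    pod_spec = manifest.get("spec", {}).get("template", {}).get("spec", {})
    for container in pod_spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        # Probes let Kubernetes detect (and route around) an unhealthy container.
        for probe in ("readinessProbe", "livenessProbe"):
            if probe not in container:
                problems.append(f"{name}: missing {probe}")
        # Without limits, one misbehaving pod can starve its neighbours.
        limits = container.get("resources", {}).get("limits", {})
        for resource in ("cpu", "memory"):
            if resource not in limits:
                problems.append(f"{name}: no {resource} limit")
        sc = container.get("securityContext", {})
        if not sc.get("runAsNonRoot"):
            problems.append(f"{name}: runAsNonRoot is not set")
        if not sc.get("readOnlyRootFilesystem"):
            problems.append(f"{name}: root filesystem is writable")
        # Kubernetes treats an unset allowPrivilegeEscalation as allowed.
        if sc.get("allowPrivilegeEscalation", True):
            problems.append(f"{name}: allowPrivilegeEscalation is not disabled")
    return problems


# Hypothetical exporter Deployment, trimmed to the fields checked above.
EXPORTER = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "spec": {"template": {"spec": {"containers": [{
        "name": "fdb-exporter",
        "readinessProbe": {"httpGet": {"path": "/metrics", "port": "metrics"}},
        "livenessProbe": {"httpGet": {"path": "/metrics", "port": "metrics"}},
        "resources": {"limits": {"cpu": "100m", "memory": "128Mi"}},
        "securityContext": {
            "runAsNonRoot": True,
            "readOnlyRootFilesystem": True,
            "allowPrivilegeEscalation": False,
        },
    }]}}},
}
```

Running the same kind of rules twice, statically in the pipeline and again at admission time, is what makes "sneaking past" one layer pointless.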
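The Cilium steps work because policy is expressed in terms of pod labels: with default-deny in place, a flow is dropped unless some policy's selectors match both ends. A minimal Python model of that matching logic follows. It only covers ingress allow rules with exact-match labels (real CiliumNetworkPolicies also cover egress, match expressions and L7 rules), and the labels and the exporter port (8080) are hypothetical; 4500 is FoundationDB's usual default port.

```python
from dataclasses import dataclass


@dataclass
class AllowRule:
    """One ingress allow rule: pods matching `to_labels` accept traffic from
    pods matching `from_labels` on any of `ports`."""
    to_labels: dict
    from_labels: dict
    ports: frozenset


def selects(selector: dict, pod_labels: dict) -> bool:
    # Exact-match selector: every key/value in the selector must be on the pod.
    return all(pod_labels.get(k) == v for k, v in selector.items())


def allowed(src: dict, dst: dict, port: int, rules: list) -> bool:
    """Default deny: a flow passes only if at least one rule explicitly allows it."""
    return any(
        selects(r.to_labels, dst) and selects(r.from_labels, src) and port in r.ports
        for r in rules
    )


# Hypothetical labels and exporter port; 4500 is FoundationDB's usual default.
RULES = [
    AllowRule({"app": "foundationdb"}, {"fdb-client": "true"}, frozenset({4500})),
    AllowRule({"app": "fdb-exporter"}, {"app": "prometheus"}, frozenset({8080})),
]
```

The design choice this illustrates: granting the exporter access to FoundationDB meant adding a label to the new pod rather than editing FoundationDB's policy, while the Prometheus fix needed a new rule, like the second `AllowRule`.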

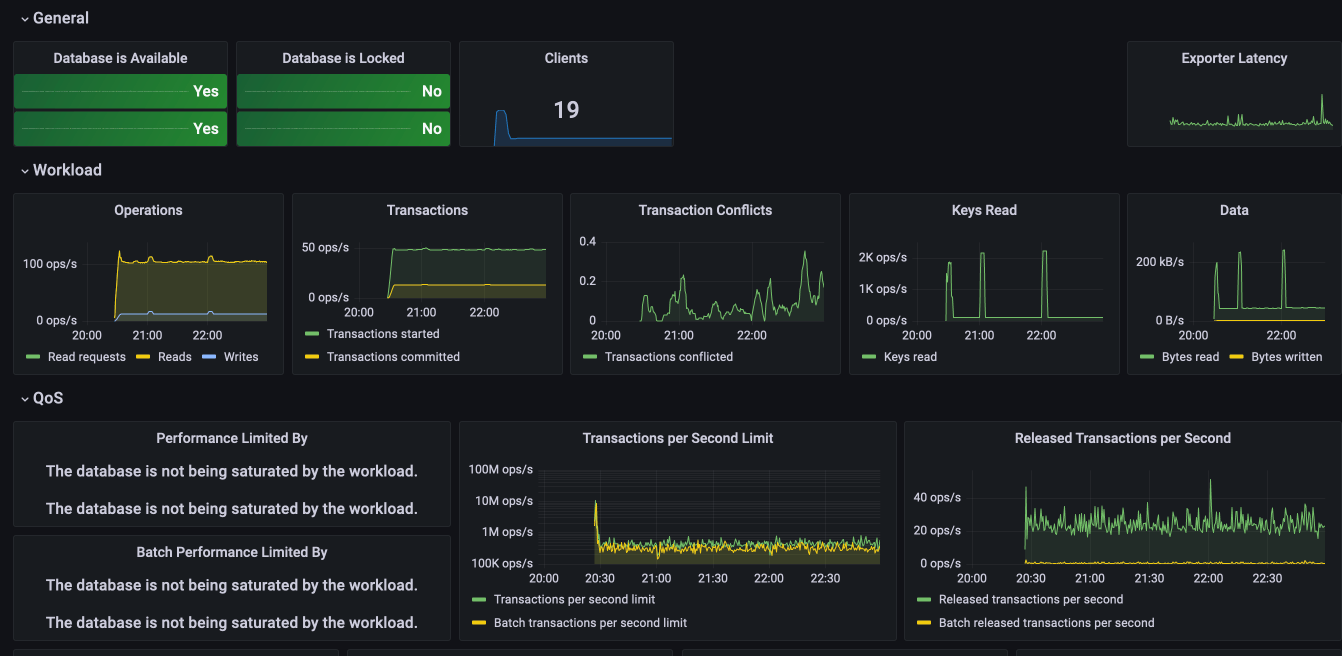

Finally, I am rewarded with metrics scraped by Prometheus, and exposed in the Grafana dashboard:

Note

It's especially gratifying to note that while all these shenanigans were going on, the existing services running in our prod and dev namespaces were completely isolated and unaffected. All changes happened in a PR branch, for which Concourse built a fresh, ephemeral namespace for every commit.
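Per-commit namespaces need names that are unique per commit and still valid Kubernetes namespace names (RFC 1123 DNS labels: lowercase alphanumerics and `-`, starting and ending with an alphanumeric, at most 63 characters). The `pr-<branch-slug>-<short-sha>` scheme below is a hypothetical sketch of one way to do it, assuming a hex commit SHA and a valid prefix.

```python
import re

MAX_NAMESPACE_LEN = 63  # RFC 1123 DNS label limit used for namespace names


def ephemeral_namespace(branch: str, commit_sha: str, prefix: str = "pr") -> str:
    """Build a hypothetical per-commit namespace name, e.g. pr-<slug>-<sha7>."""
    short_sha = commit_sha.lower()[:7]
    # Collapse anything outside [a-z0-9] (slashes, underscores, dots) into '-'.
    slug = re.sub(r"[^a-z0-9]+", "-", branch.lower()).strip("-")
    # Reserve room for the prefix, the short SHA and the two joining dashes.
    room = MAX_NAMESPACE_LEN - len(prefix) - len(short_sha) - 2
    slug = slug[:room].rstrip("-")
    # Skip an empty slug so we never emit a double dash.
    return "-".join(part for part in (prefix, slug, short_sha) if part)
```

Including the short SHA means every commit gets its own namespace, so tearing one down never touches another commit's environment, let alone dev or prod.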

Why is this a big deal?

I wanted to highlight how many levels of security / validation we employ in order to introduce any change into our clusters, even a simple metrics scraper. It may seem overly burdensome for a simple trial / tool, but my experience has been that "temporary is permanent", and the sooner you deploy something properly, the more resilient and reliable the whole system is.

Do you want to be a PITA too?

This is what I love doing (which is why I'm blogging about it at 11pm!). If you're looking to augment / improve your Kubernetes layered security posture, hit me up, and let's talk business!

Chef's notes 📓
